For decades, compliance has demanded extensive manual work. Consider a typical access review: after user permissions are provisioned or revoked, compliance teams must manually confirm that changes were authorized, documented, and correctly executed. Change management, policy reviews, and document control have similarly required labor-intensive checks after the fact, creating operational costs and bottlenecks to business operations.

AI is shifting this landscape from reactive manual reviews to proactive, continuous oversight. AI-enabled systems can monitor access changes in real-time, identify unusual patterns, compare changes against policy, and surface potential issues as they occur. While final decisions remain with compliance professionals, much of the routine work is handled through intelligent automation and pre-filtered insights, allowing human experts to focus on complex or high-risk decisions.

For organizations, AI reduces both administrative burden and oversight risk by detecting anomalies earlier and embedding compliance into daily workflows.

What is AI compliance?

AI compliance refers to ensuring that artificial intelligence systems and their applications adhere to relevant laws, regulations, standards, and ethical guidelines. This includes making sure AI systems operate within legal frameworks, respect privacy, maintain security, avoid bias, and function as intended while minimizing risks. AI compliance encompasses both compliance of AI systems themselves and how AI can be used to enhance broader organizational compliance activities.

Why is AI compliance important?

AI compliance is crucial because it:

- Reduces legal and regulatory risks as AI systems increasingly face scrutiny

- Builds trust with customers, partners, and regulators

- Prevents potential harm from biased or AI systems that are not secure

- Enables organizations to deploy AI confidently while meeting regulatory requirements

- Transforms compliance from a reactive burden to a proactive business enabler

- Helps maintain competitive advantage in markets where AI is becoming standard

AI in documentation and due diligence

A significant challenge in compliance—particularly for companies scaling operations or pursuing new partnerships—is completing large numbers of due diligence questionnaires (DDQs). These often contain framework-specific questions referencing an organization’s policies, procedures, and audit evidence. Historically, completing DDQs required searching through policy documents, audit reports, and prior questionnaires, resulting in duplicated effort, outdated responses, and delays.

AI transforms this process. Generative AI models integrated into compliance platforms can cross-reference an organization’s policy repository, certifications, and audit materials. When new DDQs arrive, AI systems review existing documentation to produce accurate, current draft answers, highlighting relevant excerpts and surfacing evidence automatically.

For example, when a fintech company receives questions about data encryption protocols in different DDQs, the AI system retrieves the latest encryption policy, relevant SOC 2 report sections, and cross-references previous answers to present a pre-drafted, current response. Compliance managers need only approve, refine, or update as needed, eliminating hours of manual review.

This approach saves time and reduces the risk of inconsistent, outdated, or incorrect answers.

Improving audit readiness and execution

Traditional audits require companies to provide extensive information—unstructured data including screenshots, change logs, email communications, and configuration files. Auditors then review this material to find evidence that controls were followed and identify potential compliance gaps.

AI integration transforms both internal and third-party audits. AI-powered tools can scan and analyze unstructured data, recognize patterns of compliant versus non-compliant behavior, and present auditors with focused alerts or evidence packages.

For a healthcare organization preparing for a HIPAA audit, compliance staff previously compiled hundreds of email chains about health record access requests, along with sample logs and policy updates. An AI system can now automatically highlight access permission deviations, correlate email approvals with system changes, and summarize exceptions requiring auditor attention.

This speeds audit cycles and enables “real-time attestation”—where internal stakeholders and external partners can receive current compliance evidence without waiting for annual reports. For instance, users can verify that their data will be encrypted at entry, with AI systems confirming real-time security status rather than relying on months-old certifications.

Navigating dynamic regulations with AI

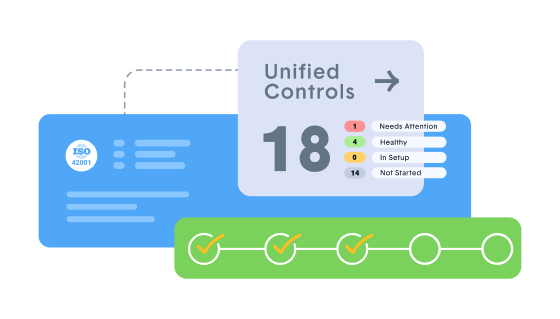

As AI adoption increases and new applications like generative models emerge, regulations are evolving rapidly. Standards such as the EU AI Act and ISO 42001 address technical security controls, responsible data use, copyright, bias, and machine learning model provenance and training.

AI can help organizations track, interpret, and implement regulatory changes. When new regulatory updates change model transparency requirements, an AI-powered compliance system can:

- Summarize regulations in plain language, noting differences from previous versions

- Map new obligations to existing internal policies and identify gaps requiring remediation

- Draft recommendations or policy updates for compliance team review and implementation

For an insurance company operating across jurisdictions, an AI engine can review European regulatory updates concerning data retention for AI-driven decisions, compare requirements with current retention policies, and notify compliance officers if retention periods need adjustment to avoid violations.

This capability is essential for cost-effective regulatory adaptation and reducing inadvertent non-compliance risk as regulations continue changing.

What is the compliance standard for AI?

Multiple standards are emerging for AI compliance, including:

- The EU AI Act, which categorizes AI applications by risk level

- ISO 42001 for AI management systems

- Industry-specific frameworks like those for healthcare AI or financial services

- Responsible AI frameworks addressing ethics, transparency, and bias

- NIST’s AI Risk Management Framework

- Organization-specific standards for model governance and data usage

These standards focus not only on technical security controls but also on responsible data use, copyright, bias, and the provenance and training of machine learning models.

Does the US have AI regulations?

The US currently takes a sector-specific approach to AI regulation rather than implementing comprehensive AI-specific legislation like the EU. Regulations affecting AI come from:

- Federal agencies’ guidance (FDA for medical AI, FTC for consumer protection)

- Executive Orders on AI safety and security

- State laws like the California Consumer Privacy Act that impact AI data usage

- Industry-specific regulations in healthcare, finance, and other sectors

- Existing laws applied to AI contexts (discrimination, privacy, consumer protection)

The landscape is evolving rapidly, with several federal initiatives working toward more coordinated approaches to AI governance and oversight.

Elevating the role of compliance professionals

A recurring compliance challenge has been high turnover of skilled professionals, partly due to the historically administrative or reactive nature of their work. With AI handling repetitive review and documentation tasks, compliance officers can move into strategic roles—interpreting complex regulatory requirements, designing controls, engaging with regulators, and advising business leaders on risk.

In a multinational technology enterprise, AI can automate supplier due diligence documentation collection and initial review while flagging ambiguous cases (such as non-standard security controls implemented by third-party vendors) for expert human judgment. This targeted focus adds organizational value, reduces burnout from repetitive manual work, and elevates compliance as a business enabler.

Data analysis, risk prioritization, and operational efficiency

AI provides significant advantages in compliance through large-scale data analysis for risk management. By analyzing historical trends and continuously monitoring transaction logs, access records, or customer interactions, AI systems can identify patterns and anomalies.

For a global retail chain handling payment card data, AI can continuously review payment processing logs for patterns historically associated with data breaches or regulatory violations—such as repeated failed logins, after-hours access, or data exfiltration attempts. Rather than overwhelming compliance staff with low-value alerts, the system prioritizes issues most indicative of actual risk and possible compliance failures.

This precision reduces false positives, improves organizational risk awareness, and ensures compliance resources are allocated where they matter most.

What are AI tools for regulatory compliance?

AI tools for regulatory compliance include systems that:

- Monitor access changes in real-time and flag unusual patterns

- Cross-reference an organization’s policy repository to produce accurate responses for due diligence questionnaires

- Scan and synthesize unstructured data for audit preparation

- Track, interpret, and operationalize regulatory changes

- Analyze large-scale data for risk management and prioritization

- Test AI systems for security vulnerabilities specific to AI models

- Provide continuous oversight rather than periodic manual reviews

Security testing and the new attack surface created by AI

As organizations deploy AI-powered solutions—such as large language models (LLMs) for customer support or document summarization—they introduce new potential attack vectors. Beyond traditional penetration testing, specialized assessments are needed to identify how prompt engineering or API misuse could extract sensitive information from AI models or trigger unauthorized actions.

For example, when a hospital integrates an LLM-based chatbot to answer patient questions, penetration testers use sophisticated prompts to determine if the chatbot inadvertently reveals private patient data or internal logic not meant for disclosure. AI-specific penetration testing becomes essential for ensuring these technologies do not compromise information security or regulatory compliance.

Purpose-built AI penetration testing can uncover vulnerabilities such as improperly scoped access to internal APIs, data leakage through context windows, or susceptibility to adversarial prompts—addressing risks unique to generative AI adoption in customer-facing workflows.

A human-centric approach to AI in compliance

Despite AI’s efficiencies, the human element remains central. Ultimate responsibility for interpreting complex scenarios, approving sensitive information sharing, and making judgment calls rests with skilled compliance professionals. AI serves as an augmentative tool, providing clarity, automation, and risk prioritization, but never removing the need for human judgment or oversight.

Organizations should use AI to:

- Automate repetitive, low-value tasks and initial document reviews

- Enhance quality, speed, and consistency of compliance processes

- Maintain human oversight for situational interpretation, governance, and final decision-making

- Continuously update AI capabilities as regulations and technologies evolve

The future of AI and compliance

The integration of AI into compliance represents a significant technological and operational shift. Processes once characterized by manual checklists, fragmented evidence gathering, and constant catch-up are becoming proactive, data-driven, and risk-focused compliance programs. AI is not only reducing costs but improving accuracy, transparency, and agility across all sectors.

A software company scaling internationally illustrates this future: AI-enabled compliance systems help the company understand emerging local privacy laws, generate and approve DDQ responses quickly, monitor audit readiness in real time, and proactively identify genuine risks from operational data. Compliance becomes a competitive advantage rather than an innovation constraint—freeing expertise for strategic engagement rather than administrative tasks.

While AI reshapes compliance, its most significant impact is empowering professionals and organizations to engage more effectively with risk, ethics, and proactive governance in the digital era. The next generation of compliance—augmented and accelerated

Related Posts

Stay connected

Subscribe to receive new blog articles and updates from Thoropass in your inbox.

Want to join our team?

Help Thoropass ensure that compliance never gets in the way of innovation.

.png)